In our previous blog, we explored how AI readiness isn’t about models but about mindset.

However, readiness alone isn’t enough. To build AI that truly creates value and earns trust, organisations must move from being ready for AI to being responsible with AI. That shift begins with one principle: AI must be responsible by design. Responsibility must be a core architectural intent, not a compliance exercise.

From Compliance to Conscious Design

For many organisations, responsibility is addressed after models are built, or treated as a governance checkbox revisited only when regulators come calling. By then, it’s often too late.

Responsibility in AI must be coded into the design and present in every model trained and every decision automated. Being Responsible by Design means starting with intention rather than reaction. It means asking the right questions before the first line of code is written:

- What’s the AI risk appetite of the business?

- Who could be affected by this model’s decisions, either directly or indirectly?

- How transparent is the algorithm’s reasoning?

- What safeguards exist to ensure fairness, accountability, and human oversight?

When organisations treat responsibility as a design decision rather than a compliance afterthought, they move from defensive compliance to strategic confidence. Responsibility stops being a constraint and becomes a competitive advantage.

Fairness: Designing for Equity

An AI system reflects the data it’s trained on, and that data is rarely free from bias. It may carry historical inequality, incomplete representation, or systemic skew. Responsible design means acknowledging and addressing those biases before automation amplifies them.

Fairness doesn’t mean identical outcomes; it means equitable treatment and opportunity. It’s about designing systems that perform consistently and justly across all contexts, populations, and perspectives. A fair AI system actively expands inclusion and access. Designing for fairness turns bias mitigation from a technical task into a moral and strategic imperative.

Transparency

Transparency is the bridge between trust and understanding. It lets stakeholders see how and why an AI system arrives at its conclusions, understand the model’s logic, and question its outcomes. When users understand how AI thinks and operates, they are more likely to trust and adopt it.

Transparency isn’t just good governance; it’s good design. It builds trust, enables accountability, and ensures that intelligence remains a tool of empowerment, not obscurity.

Accountability

Every AI system is the product of human intent and should therefore have a human accountable for it. Being accountable by design means establishing clear ownership, oversight and traceability across the AI lifecycle. From data collection to deployment, every stage must have someone responsible for its ethical and operational integrity.

Accountability turns responsibility from a policy statement into an operational reality. It reinforces the principle that AI may automate decisions, but it never automates responsibility.

ISO 42001: The Global Framework for Responsible AI

As AI systems become central to decision-making, organisations face growing pressure to demonstrate that their technologies are safe, fair, and explainable. Until recently, most organisations have relied on internal policies or ethical guidelines to define responsible AI.

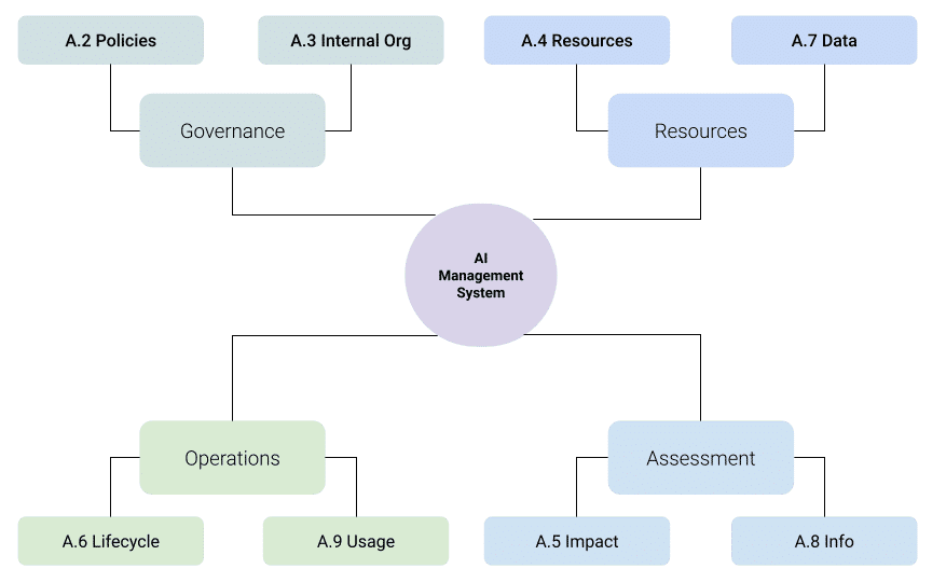

The introduction of ISO/IEC 42001, the world’s first international standard for AI Management Systems (AIMS), provides a structured approach to integrate ethics, responsibility and governance into the full AI lifecycle.

It guides organisations to:

- Govern with clarity: Define policies, responsibilities, and oversight mechanisms for AI across all business units.

- Manage risk with intent: Identify and mitigate ethical, legal, and operational risks tied to AI use.

- Ensure accountability: Assign clear ownership for system performance, ethical compliance, and stakeholder impact.

- Document and demonstrate trust: Maintain transparent processes and evidence for how AI systems are designed, tested, and deployed responsibly, and measure KPIs such as model performance and reliability scores.

ISO 42001 moves responsible AI from principle to practice. When implemented well, it doesn’t slow innovation but accelerates adoption by giving regulators, partners, and customers something every AI system needs: proof of trustworthiness.

Bias Mitigation: Designing for Integrity

Every dataset tells a story, and some of those stories are incomplete or distorted. Bias often enters long before a model is trained, through how data is collected, labelled or represented.

Operational readiness means creating structured review processes to identify, measure, and mitigate bias throughout the AI lifecycle. That includes diverse data sourcing, continuous validation, and routine audits. Bias can’t be eliminated entirely, but it can be managed continuously.

When handled well, bias management improves both the accuracy of the system’s outcomes and trust in them. For example, a company might deploy an AI-driven recruitment tool to screen job applications. To ensure fairness, the organisation should conduct a structured review of the training data, identifying and removing features that could introduce bias, such as gender or ethnicity, along with proxies that indirectly encode them. It must also perform regular audits and validation checks, because removing a feature does not by itself guarantee fair outcomes. Together, these steps reduce the risk of discriminatory outcomes and demonstrate a commitment to equity and inclusion.
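The audit step in the recruitment example can be made concrete by comparing outcomes across groups. The Python sketch below computes each group’s selection rate from a list of screening outcomes and flags the results if the lowest rate falls below 80% of the highest, the “four-fifths” rule of thumb from US employment-selection guidance. The data, the group labels and the 0.8 threshold are illustrative assumptions, and the group label is assumed to be held aside for auditing only, not used as a model input.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Return the share of applicants shortlisted in each group.

    `outcomes` is a list of (group, shortlisted) pairs. The group label is
    assumed to be kept for auditing only and never fed to the model.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, shortlisted in outcomes:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


def audit(outcomes, threshold=0.8):
    """Flag the screening results if the ratio falls below the threshold."""
    rates = selection_rates(outcomes)
    ratio = disparate_impact_ratio(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}


if __name__ == "__main__":
    # Hypothetical screening results: 50 applicants in each group.
    sample = ([("A", True)] * 20 + [("A", False)] * 30
              + [("B", True)] * 12 + [("B", False)] * 38)
    print(audit(sample))
```

Here group A is shortlisted at 40% and group B at 24%, giving a ratio of 0.6, so the run is flagged. A flag is a prompt for human review rather than proof of discrimination, and a ratio above the threshold does not rule discrimination out either; that is why the text calls for routine audits rather than a one-off check.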

Explainability as a Default

Explainability means every decision made by a model can be traced, justified, and communicated in human-understandable terms. This doesn’t require exposing every algorithmic detail; it requires clarity about why an output occurred and how it was derived.

If your teams can’t explain a model’s decision, neither can your customers. Explainability by default ensures that intelligence remains interpretable and therefore accountable.

Consider a financial institution that deploys an AI model for credit scoring. To comply with regulatory requirements and build customer trust, the institution ensures that every decision the model makes can be traced and explained in human-understandable terms. It uses explainability tools to identify and record which factors influenced each credit decision, and provides clear justifications to applicants and for audit purposes.
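For an inherently interpretable model such as a linear scorecard, recording “which factors influenced each credit decision” can be as simple as ranking each feature’s contribution relative to a reference applicant. The Python sketch below does this for a hypothetical scorecard; the feature names, weights, baseline values and approval threshold are all invented for illustration. More complex models usually need dedicated attribution tools, which this sketch does not attempt to replicate.

```python
# Hypothetical scorecard weights (illustrative values only).
WEIGHTS = {
    "income_k": 0.03,          # annual income, in thousands
    "debt_to_income": -4.0,    # ratio between 0 and 1
    "missed_payments": -0.8,   # count over an assumed 24-month window
    "years_at_address": 0.1,
}
# Hypothetical reference applicant that contributions are measured against.
BASELINE = {
    "income_k": 50.0,
    "debt_to_income": 0.30,
    "missed_payments": 1.0,
    "years_at_address": 4.0,
}
BASE_SCORE = 0.0  # score of the baseline applicant
THRESHOLD = 0.0   # approve when score >= THRESHOLD


def explain(applicant, top_n=2):
    """Score an applicant and return the features that drove the decision.

    Each contribution is weight * (value - baseline), so by construction the
    contributions add up to the score's distance from BASE_SCORE.
    """
    contributions = {
        f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS
    }
    score = BASE_SCORE + sum(contributions.values())
    approved = score >= THRESHOLD
    # Approved: list the strongest positive factors first.
    # Declined: list the most negative factors first.
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=approved)
    return {
        "score": round(score, 3),
        "approved": approved,
        "reasons": [f for f, _ in ranked[:top_n]],
        "contributions": contributions,
    }


if __name__ == "__main__":
    print(explain({"income_k": 40, "debt_to_income": 0.55,
                   "missed_payments": 3, "years_at_address": 2}))
```

Because every contribution is stored alongside the decision, the same record can serve both the applicant-facing justification and the audit trail.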

Ethical Decisioning Frameworks

Not every decision can or should be automated. In high-impact or high-risk use cases, human oversight must remain integral. When oversight is built into workflows instead of just being written into policy, organisations ensure that humans remain the moral compass of intelligent systems.
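Building oversight into the workflow can mean a routing step that decides, case by case, whether the model may act on its own. The Python sketch below assumes each use case has been assigned an impact level during design review and that the model reports a confidence score between 0 and 1; the impact levels, the `route` function and the 0.9 confidence floor are hypothetical choices an organisation would set for itself.

```python
from dataclasses import dataclass
from enum import Enum


class Impact(Enum):
    """Hypothetical impact levels assigned per use case at design time."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Decision:
    case_id: str
    outcome: str
    confidence: float  # model-reported confidence, 0..1 (assumed available)
    impact: Impact


def route(decision: Decision, confidence_floor: float = 0.9) -> str:
    """Return 'auto' if the model may act alone, otherwise 'human_review'.

    High-impact cases always go to a person, whatever the confidence.
    Other cases go to a person when the model is not confident enough.
    """
    if decision.impact is Impact.HIGH:
        return "human_review"
    if decision.confidence < confidence_floor:
        return "human_review"
    return "auto"


if __name__ == "__main__":
    print(route(Decision("c-1", "approve", 0.99, Impact.HIGH)))
    print(route(Decision("c-2", "approve", 0.95, Impact.LOW)))
```

Sending every high-impact case to a reviewer regardless of confidence reflects the point that some decisions should not be automated at all, while the confidence floor catches uncertain cases elsewhere.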

Privacy and Security

Privacy and security are foundational to building trust in AI systems. Responsible AI design requires that data protection and security controls be embedded from the outset. This includes implementing robust data loss prevention (DLP) policies, sensitivity labelling, and continuous monitoring to safeguard sensitive information. By prioritising privacy and security, organisations not only protect their reputation but also strengthen the integrity and trustworthiness of their AI initiatives.

A healthcare provider, for example, might develop an AI system for patient diagnostics. From the outset, it embeds robust data protection measures, such as DLP policies, sensitivity labelling and continuous monitoring, to safeguard sensitive patient information. This reduces the risk of data leakage and keeps patient data secure while still producing effective diagnostic outcomes.
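DLP and sensitivity-labelling platforms handle this at scale; the Python sketch below only illustrates the underlying idea of masking direct identifiers and labelling a record before patient text reaches a model or its logs. The two patterns (an email address and an assumed ten-digit patient number format) and the two label names are hypothetical, and regular expressions alone are not an adequate de-identification method for real clinical data.

```python
import re

# Hypothetical identifier patterns; real deployments need far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PATIENT_ID": re.compile(r"\b\d{10}\b"),  # assumed 10-digit format
}


def mask(text):
    """Replace matched identifiers with placeholders and report what was found."""
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


def sensitivity_label(found):
    """Assign a coarse label: 'confidential' if any identifier was present."""
    return "confidential" if found else "general"


if __name__ == "__main__":
    masked, found = mask("Patient 1234567890 emailed jo.smith@example.org about results")
    print(masked, sensitivity_label(found))
```

Masking before the data leaves the source system, rather than after, keeps identifiers out of prompts, training sets and logs in the first place.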

Continuous Testing and Lifecycle Management

Responsible AI is not a one-off achievement but a continuous journey. Continuous testing ensures that AI systems are rigorously validated at every stage, from development through to deployment and beyond.

Lifecycle management extends this discipline by embedding structured review processes throughout the AI lifecycle. This includes regular health checks, impact assessments, and updates to both technical and business strategies. Crucially, feedback loops are established to gather real-world insights, enabling iterative enhancements and continuous improvement. These loops ensure that AI systems remain effective and aligned with evolving organisational goals and stakeholder needs.
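A common health check within these feedback loops compares the distribution of a model input or score in production with the distribution seen at validation time. The Python sketch below computes the Population Stability Index (PSI), one widely used drift measure, over pre-binned proportions. The binning, the example figures and the 0.25 alert level (a common rule of thumb, not a formal standard) are assumptions.

```python
import math


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions.

    `expected` and `actual` are lists of bin proportions, each summing to 1.
    PSI = sum((a - e) * ln(a / e)); each term is non-negative, so PSI >= 0.
    A small epsilon avoids log(0) and division by zero for empty bins.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def health_check(expected, actual, alert_level=0.25):
    """Return the PSI and whether it crosses the (assumed) alert level."""
    value = psi(expected, actual)
    return {"psi": round(value, 4), "drift_alert": value >= alert_level}


if __name__ == "__main__":
    validation = [0.25, 0.25, 0.25, 0.25]  # hypothetical score bins at sign-off
    production = [0.05, 0.15, 0.30, 0.50]  # hypothetical bins this month
    print(health_check(validation, production))
```

An alert is a trigger for the structured review described above, such as retraining, recalibration or an impact reassessment, rather than an automatic fix.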

Cross-Functional Governance

Responsible AI cannot sit in a single department. It isn’t a function owned solely by data scientists, nor a policy led only by compliance. It’s a system of shared accountability that brings together technology, legal, compliance, risk, operations, and business leadership under a unified governance framework. Organisational risk frameworks should also be extended to cover AI initiatives and the risks specific to them.

Cross-functional governance ensures that technical innovation and organisational integrity evolve together, not in isolation. When cross-functional governance is done well, it builds strategic coherence. In short, governance becomes a bridge connecting what’s technologically possible with what’s ethically and strategically right.

A Cultural Shift, Not Just a Compliance Shift

Being Responsible by Design is as much about mindset as it is about method. It’s a shift from “Can we build it?” to “Should we build it and how do we build it responsibly?”

That shift starts with leadership. Executives must set the tone, embedding responsibility into vision and incentives. Middle managers must operationalise it in process. Technologists must express it in code and data.

Responsibility cannot be owned by one department. It must be part of the organisational norm.

The Future of AI: Built on Trust

AI’s potential is immense, but its promise depends on trust. Like a mirror, AI doesn’t invent reality; it reflects it. It shows us what we feed into it: our data, our choices and our biases. If the reflection is distorted, the problem isn’t with the glass but with what stands before it. Responsible AI requires looking critically at what we teach our systems and asking whether the image we’re producing is one we’re proud of. Embedding trust from the start means ensuring that what AI reflects back to society is accuracy, fairness, and inclusion.

Organisations that embed responsibility into their design process from day one will be the ones that scale safely, innovate sustainably, and lead confidently.

Let’s Build the Future Together

Ready to transform your business with solutions driven by empathy, excellence, and innovation?